Research Areas

Reinforcement learning (RL) applied to control of complex dynamic systems

Reinforcement Learning (RL) represents a class of machine learning problems where an agent explores, interacts with, and learns to operate in a dynamic, often complex, and uncertain environment. Typically, the agent observes its environment, takes an action, and then observes both the resulting change in the environment and the reward it receives as a consequence of this change. This enables the agent to study the consequences of its actions, and by going through repeated cycles of observation-action-reward the agent develops a policy function that indicates how to perform effective actions that maximize its long-term rewards. In our work, we have applied RL methods for control optimization in complex, dynamic systems.
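The observation-action-reward cycle described above can be illustrated with a minimal tabular Q-learning sketch. The chain environment, the function names (`step`, `q_learning`, `greedy_policy`), and all hyperparameter values are hypothetical choices made for illustration; they are not the methods or systems used in our work.

```python
import random

def step(state, action, n_states=5):
    """Hypothetical chain environment: action 1 moves right, action 0 moves left.
    Reaching the last state yields reward 1 and ends the episode."""
    nxt = min(state + 1, n_states - 1) if action == 1 else max(state - 1, 0)
    done = nxt == n_states - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=500, n_states=5, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values through repeated observation-action-reward cycles."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise act greedily
            # (ties are broken in favor of action 1).
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action, n_states)
            # Move the estimate toward the reward plus the discounted future value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q

def greedy_policy(q):
    """Policy function: the action with the highest learned value in each state."""
    return [0 if row[0] > row[1] else 1 for row in q]
```

In this toy setting the learned policy moves right in every non-terminal state, which is the behavior that maximizes the long-term (discounted) reward.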

Application areas include energy optimization for large buildings, optimal fuel transfer in aircraft systems, and fault-tolerant control of unmanned aerial vehicles.

Energy Optimization in Large Buildings

Optimizing Energy and Comfort in HVAC Systems

Energy-efficient control of Heating, Ventilation, and Air Conditioning (HVAC) systems is an important aspect of building operations because they account for the major share of energy consumed by buildings. Smart buildings enable us to leverage the rich history of data available via the Building Automation System to understand the building energy dynamics and devise efficient HVAC control logic to minimize energy consumption and maximize comfort. These control logics can be developed offline using an array of techniques where the overall goal is to maximize an objective function that reflects the energy savings and comfort. Our lab has adopted the Deep Reinforcement Learning approach to develop and test these techniques on data from real buildings on the university campus as well as standard building benchmarks in the Buildings Library from LBNL.
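One way to picture an objective that reflects both energy savings and comfort is a per-step reward that penalizes energy use and comfort violations. The sketch below is an assumption for illustration only: the function name `hvac_reward`, the comfort band, and the weights are hypothetical and are not the objective used in our controllers.

```python
def hvac_reward(energy_kwh, zone_temp_c,
                comfort_low_c=21.0, comfort_high_c=24.0,
                energy_weight=1.0, comfort_weight=2.0):
    """Hypothetical reward: negative weighted sum of energy use and comfort violation.

    The comfort violation is the number of degrees the zone temperature lies
    outside the [comfort_low_c, comfort_high_c] band (zero inside the band).
    """
    violation = max(comfort_low_c - zone_temp_c, 0.0, zone_temp_c - comfort_high_c)
    return -(energy_weight * energy_kwh + comfort_weight * violation)
```

The relative size of the two weights sets the trade-off: a larger comfort weight makes the agent spend more energy to stay inside the band.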

Deep Reinforcement Learning Methods for Non-Stationary Dynamic Systems

Most real-world systems undergo changes that cause their dynamic model to change over time. In other words, these systems exhibit non-stationary behaviors. Non-stationary behaviors may arise because the components of a system degrade and/or because features of the environment in which the system operates change. For example, in the case of large buildings, weather conditions or room occupancy may change abruptly, and unanticipated faults in building components may occur. Learning deep reinforcement learning controllers from non-stationary Markov decision processes (MDPs) is particularly difficult for non-episodic tasks because the agent is unable to explore the time axis at will as it experiences distributional shifts. At the MACS lab, we are working on creating optimal controllers that are able to adapt to non-stationary systems.

To develop a reinforcement learning controller that can work on non-stationary systems, we propose a relearning approach in which the controller is adapted as the system behavior changes both continuously and discretely. We demonstrate this relearning approach in two ways: a periodic update and a trigger-based update. In the periodic update, the controller is retrained on the latest system behavior at regular intervals, irrespective of whether the changes in system behavior were minor or significant. Under a trigger-based update, the reward signal is continuously monitored while the controller is deployed. The reward signal provides a metric that quantifies how well the controller is performing on the system. Optimal performance would imply a reward signal that is stationary throughout the deployment. A statistically meaningful change in the signal implies that the controller is no longer performing optimally owing to the changing behavior of the system. Such a change triggers relearning of the controller on the latest behavior of the system. In this way, we address the problem of continuous adaptation in a non-stationary system.
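The trigger-based update can be sketched as a simple change test on the monitored reward signal. The test below compares the mean reward in a recent window against a reference window and fires when the difference exceeds a chosen number of standard errors. This particular test, the function names, the window length, and the threshold are assumptions for illustration; the statistical test used in our work may differ.

```python
from math import sqrt
from statistics import mean, stdev

def reward_shift_detected(reference, recent, threshold=3.0):
    """Return True if the mean of `recent` differs from the mean of `reference`
    by more than `threshold` standard errors (a Welch-style z statistic)."""
    se = sqrt(stdev(reference) ** 2 / len(reference) + stdev(recent) ** 2 / len(recent))
    diff = abs(mean(recent) - mean(reference))
    if se == 0.0:
        # Both windows are constant: any difference in level counts as a shift.
        return diff > 0.0
    return diff / se > threshold

def first_trigger(rewards, window=20, threshold=3.0):
    """Return the first time step at which relearning would be triggered, or None.

    The first `window` rewards serve as the reference; after that, a sliding
    window of the most recent rewards is tested against it at every step.
    """
    reference = rewards[:window]
    for end in range(2 * window, len(rewards) + 1):
        if reward_shift_detected(reference, rewards[end - window:end], threshold):
            return end
    return None
```

A periodic update, by contrast, would need no test at all: relearning would simply be scheduled every fixed number of steps.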

Fault-tolerant control

Fault-tolerant control (FTC) represents a cost-effective methodology to maintain operations of a system in conditions where components of the system may be degrading or when faults occur in the system. A key notion addressed by fault-tolerant control is to modify the control functions to ensure that the system performance does not degrade significantly and that the system does not completely fail during operations, which could result in catastrophic scenarios. FTC is cost-effective especially in contrast to fail-safe control, where additional redundancies are built into a system to maintain performance in case of faults.

Our earlier research on FTC, or fault-adaptive control, was model-based. We combined fault detection, isolation, and identification with model-predictive control (MPC) algorithms that adjusted to faults by updating the system model to accommodate the faulty situation. In recent work, we have developed control strategies using reinforcement learning (RL) for incipient and abrupt faults in hybrid systems that exhibit continuous behaviors interspersed with discrete changes when components in the system turn on and off.

RL Methods for FTC with incipient and abrupt faults

Continued RL is inherently adaptive. As the environment of a controller changes, the feedback signals change, and the controller attempts to derive the new optimal strategy. We apply this paradigm to fault tolerance. The environment is susceptible to sudden, discontinuous disturbances (abrupt faults) and gradual, continuous changes (incipient faults).
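The distinction between the two fault types can be made concrete with two fault profiles that could be injected into a simulated environment, for example as a loss of actuator effectiveness. The sketch below is illustrative only; the function names and parameters (`onset`, `magnitude`, `rate`, `limit`) are hypothetical and do not describe our fault models.

```python
def abrupt_fault(t, onset, magnitude):
    """Abrupt fault: a step change of size `magnitude` that appears at time `onset`."""
    return magnitude if t >= onset else 0.0

def incipient_fault(t, onset, rate, limit):
    """Incipient fault: a drift that grows linearly at `rate` after `onset`
    and saturates at `limit`."""
    return min(rate * max(t - onset, 0.0), limit)
```

An abrupt fault changes the feedback signals from one step to the next, while an incipient fault shifts them slowly, so a continually learning controller sees the two as a sudden versus a gradual change in its environment.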

Results and Current Research

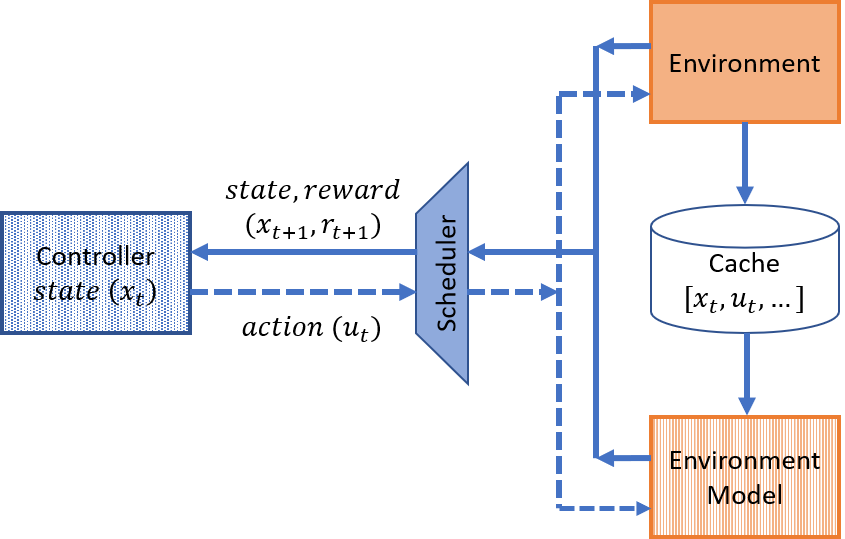

We are currently pursuing data-driven approaches to FTC using RL. RL is heavily dependent on data, and its reliance on stochastic optimization algorithms makes it slow to converge. We are researching ways to mitigate the sample inefficiency of RL and to speed up the convergence of the learning process. Our current work involves generating data-driven models of an environment with which an agent can interact, in addition to the real environment. We use meta-learning strategies to ensure the learning process is quick to adapt to changes in the environment.

Online Risk Analysis Methods for UAV Flight Safety

The use of Unmanned Aerial Vehicles (UAVs) is increasing at unprecedented rates across a wide range of applications that include surveillance, package delivery, photography, cartography, remote sensing, agriculture, military missions, and more. The FAA passed regulations in 2016 that authorized the commercial use of UAVs. As the adoption and use of drones increase, so does the risk of collisions and mishaps that can result in a loss of money, time, productivity, or, most importantly, human lives. There is an abundance of research on the technical aspects of UAV systems: their design, implementation, operation, diagnostics, and stability. However, a more holistic approach to ensuring safe operations in heterogeneous airspace is required to address this multi-faceted problem, which comprises component and system diagnostics, system-level prognostics, airspace safety assurance, flight path risk assessment, and trajectory planning and replanning, to name a few. Our overall goal is to support the safe operations of UAVs operating in urban environments. We are working on decision-making frameworks to maintain system safety during UAV missions (flight from a starting point to a destination going through a sequence of pre-determined waypoints) by minimizing the overall risk of mission failure, considering a number of risk factors along with uncertainties in the environment and the operating state of the vehicle.

Fault detection and isolation (FDI)

Our research is geared toward developing model- and data-driven schemes for monitoring, prediction, and diagnosis of complex dynamic continuous systems.

Model-Based Diagnosis

Our early work focused on monitoring and diagnosis from transient behaviors due to the occurrence of faults during operations of a continuous or hybrid dynamic system. We modeled nominal system behaviors and the effects of faults starting from bond graph models, which use a combination of the physics and structure of a system to model dynamic system behavior. For efficient diagnosis, we derived a temporal causal graph (TCG) to capture dynamic system behavior and the transients in behavior when faults occur, represented as fault signatures. Behavior and diagnostic analysis were performed in a qualitative reasoning framework, and fault identification involved the use of constrained optimization methods.

In later work, we have developed structural methods for distributed fault detection and isolation in continuous and hybrid systems. Our method is based on analyzing redundant equation sets for residual generation, i.e., the use of minimal structurally over-determined sets for diagnosis.
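The idea of residual generation from a redundant equation can be illustrated on a single tank whose level, inflow, and outflow are all measured. The mass balance area * dh/dt = q_in - q_out is then over-determined by the measurements, so its discretized form yields a residual that stays near zero in nominal operation. This is a minimal sketch under those assumptions; the tank model, the function names, and the threshold are hypothetical, and the sketch does not implement the search for minimal structurally over-determined sets.

```python
def mass_balance_residuals(levels, inflows, outflows, area, dt):
    """Residual of the discretized mass balance for each time step:
    (h[k+1] - h[k]) - dt * (q_in[k] - q_out[k]) / area.
    A value near zero means the measurements are consistent with the model."""
    return [
        (levels[k + 1] - levels[k]) - dt * (inflows[k] - outflows[k]) / area
        for k in range(len(levels) - 1)
    ]

def detect_faults(residual_seq, threshold):
    """Flag the time steps whose residual magnitude exceeds the threshold."""
    return [abs(r) > threshold for r in residual_seq]
```

For example, with a constant net inflow of 0.1 per step and unit area, level measurements 1.0, 1.1, 1.2, 1.1 give a residual of about -0.2 on the last step, which a threshold of 0.05 would flag as inconsistent with the model (for instance, a leak or a sensor fault).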

Contributions

- Efficient methods for diagnosis of complex, continuous dynamic systems combining qualitative and quantitative methods

- Hybrid systems diagnosis

- Developing fault detection and isolation methods for incipient faults using Dynamic Bayesian networks (DBNs)

- Distributed system diagnosis using structurally over-determined sets

- Sensor selection methods for hybrid systems diagnosis

Data-Driven Methods for Diagnosis

Systems-Level Prognostics

System-level prognostics encompasses two distinct but related problems: (1) estimating the current system state and the degradation rates of individual components; and (2) predicting future system performance by deriving system Remaining Useful Life (RUL) functions. Nonlinearities in the system, uncertainties in the model structure and parameter values, measurement noise, and unknown environmental conditions that influence system operations can all affect the accuracy and convergence properties of the estimation and prediction tasks. We are working on developing analytic frameworks based on model-based and data-driven methods for tracking the degradation rate of individual components of a system and developing prediction methods to compute and predict the overall change in system performance and system RUL.
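The two problems can be illustrated in their simplest form: estimate a degradation rate from observed health measurements, then extrapolate to a failure threshold to obtain an RUL estimate. The sketch below assumes a single health indicator that degrades linearly and ignores noise models and uncertainty; the function name `estimate_rul` and its parameters are hypothetical and do not represent our prognostics frameworks.

```python
def estimate_rul(times, health, failure_threshold):
    """Fit a least-squares line to (time, health) and extrapolate to the threshold.

    Returns the estimated time remaining after the last measurement, or None
    if the fitted trend shows no degradation (non-negative slope).
    """
    n = len(times)
    t_mean = sum(times) / n
    h_mean = sum(health) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (h - h_mean) for t, h in zip(times, health))
    slope = sxy / sxx                      # estimated degradation rate
    intercept = h_mean - slope * t_mean
    if slope >= 0.0:
        return None
    t_fail = (failure_threshold - intercept) / slope
    return max(t_fail - times[-1], 0.0)
```

With health values 1.0, 0.9, 0.8, 0.7 at times 0 to 3 and a failure threshold of 0.5, the fitted rate is -0.1 per time unit and the estimated RUL is about 2 time units. A system-level RUL would additionally have to combine the degradation of several components through a model of system performance.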