MELD Projects

Consortium-Wide Projects

Speeded Reaction Time (SRT) to Auditory, Visual, & Audiovisual Stimulation

This study measures SRT to unisensory and multisensory inputs in order to characterize the development of multisensory facilitation effects in children.

Active Passive Multisensory Perception Development

Movement can alter the perception of time in a multisensory environment. However, how this influence changes during development has not yet been investigated by directly comparing active and passive movement. Here, we evaluate how the temporal binding window is altered by active and passive movement. Haptic devices will be used for this purpose.

Videos: Movement on Temporal Order Judgement | Active & Passive Audio-Tactile Binding

Serial Dependence Orientation Tactile Perception in Blind Individuals

This research aims to investigate how blind individuals perceive tactile stimuli and to examine whether the phenomenon of serial dependence also influences the perception of touch. Through a series of studies, this research seeks to explore whether prior tactile experiences shape the perception of subsequent stimuli, similar to the effect observed in visual perception. By analyzing these interactions, we aim to better understand how the brain processes and integrates sensory information in the absence of vision, shedding light on the mechanisms underlying sensory compensation and multisensory integration.
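As a rough illustration of how serial dependence is often quantified, the sketch below regresses the response error on each trial against the difference between the previous and the current stimulus. A positive slope indicates that responses are attracted toward the preceding stimulus. The function name, the stimulus values, and the 20% attraction are hypothetical and chosen only for illustration; the sketch also treats the stimulus dimension as linear, which would need adapting for circular quantities such as orientation. It is not the analysis used in this project.

```python
def serial_dependence_slope(stimuli, responses):
    """Least-squares slope of current-trial error vs. (previous - current) stimulus.

    The first trial is skipped because it has no preceding stimulus.
    """
    errors = [r - s for s, r in zip(stimuli, responses)][1:]
    deltas = [prev - cur for prev, cur in zip(stimuli, stimuli[1:])]
    mean_d = sum(deltas) / len(deltas)
    mean_e = sum(errors) / len(errors)
    cov = sum((d - mean_d) * (e - mean_e) for d, e in zip(deltas, errors))
    var = sum((d - mean_d) ** 2 for d in deltas)
    return cov / var


# Hypothetical data (arbitrary units): every response is pulled 20% of the
# way toward the stimulus presented on the previous trial.
stimuli = [10, 40, 25, 60, 15, 50, 30]
responses = [stimuli[0]] + [
    cur + 0.2 * (prev - cur) for prev, cur in zip(stimuli, stimuli[1:])
]

slope = serial_dependence_slope(stimuli, responses)
print(f"serial dependence slope: {slope:.2f}")
```

A slope near zero would suggest no influence of the previous trial, and a negative slope would suggest repulsion rather than attraction.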

Serial Dependence in Multisensory Processes

It is currently unknown how performance on preceding trials influences the processing of current stimulus information, particularly under multisensory conditions where, on a given trial, a stimulus can partially “repeat” in one sensory modality while being “novel” in another.

Linking Low-Level Multisensory Processes to Higher-Level Cognition

Our prior work suggests links between low-level multisensory processes and higher-level cognitive (dys)functions, though this has yet to be firmly established—particularly in children. We have collected SRT data and measures of cognitive abilities (working memory, fluid intelligence) to examine these links. This project addresses a key gap by collecting data from older adolescents (ages 15–18), bridging findings from younger schoolchildren and adults. Identifying these links could inform new screening and intervention strategies.

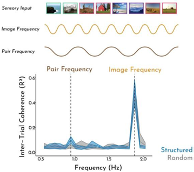

EEG Frequency Tagging Analysis Pipelines

EEG frequency tagging is a fast and efficient way to obtain a high signal-to-noise ratio (SNR) in a short recording time, which makes it highly promising for pediatric populations. However, existing analysis pipelines are heterogeneous and ill-defined. This project aims to develop a stable, well-defined pipeline.
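One common way to express SNR in frequency-tagging studies is the amplitude at the tagged frequency divided by the mean amplitude of nearby frequency bins. The sketch below shows that idea on a synthetic single-channel signal. The function names, the number of neighbouring bins, the skipped adjacent bin, and all signal parameters are assumptions made for illustration; this is not the consortium's pipeline.

```python
import cmath
import math
import random


def amplitude_at_bin(signal, k):
    """Amplitude of DFT bin k, computed directly (only a few bins are needed)."""
    n = len(signal)
    total = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
    return abs(total) / n


def tagging_snr(signal, sample_rate, tag_freq, n_neighbors=4, skip=1):
    """Amplitude at the tagged bin divided by the mean amplitude of its neighbours.

    `skip` bins immediately adjacent to the tagged bin are excluded on each side,
    then `n_neighbors` bins on each side are averaged as the noise estimate.
    """
    n = len(signal)
    k = round(tag_freq * n / sample_rate)
    offsets = range(skip + 1, skip + 1 + n_neighbors)
    neighbors = [k - o for o in offsets] + [k + o for o in offsets]
    noise = sum(amplitude_at_bin(signal, b) for b in neighbors) / len(neighbors)
    return amplitude_at_bin(signal, k) / noise


# Synthetic example (hypothetical values): 2 s at 100 Hz, a 6 Hz tagged
# response buried in Gaussian noise.
rng = random.Random(0)
sample_rate = 100
n_samples = 200
signal = [
    math.sin(2 * math.pi * 6.0 * i / sample_rate) + rng.gauss(0.0, 0.5)
    for i in range(n_samples)
]

snr = tagging_snr(signal, sample_rate, tag_freq=6.0)
print(f"SNR at 6 Hz: {snr:.1f}")
```

The recording length is chosen so that the tagged frequency falls exactly on a frequency bin; with other lengths the response would leak into neighbouring bins and lower the estimate.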

EEG Analysis Pipeline for Assessing Multisensory Processes in Children

A crucial element for the MELD consortium will be to establish a streamlined analysis pipeline for EEG data.

Standardized Multisensory Integration Analysis

A GUI that allows detailed analysis of race model violations, one of the standard tools used to assess multisensory integration (MSI).
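For readers unfamiliar with the test, the race model inequality states that, if the two senses merely race independently, the cumulative probability of a multisensory response by time t cannot exceed the sum of the two unisensory cumulative probabilities. The sketch below checks that bound on empirical distributions. The function names and the reaction times are hypothetical and serve only as an illustration; the sketch does not describe the GUI's implementation.

```python
from bisect import bisect_right


def ecdf(sorted_samples, t):
    """Empirical cumulative probability P(RT <= t) for pre-sorted samples."""
    return bisect_right(sorted_samples, t) / len(sorted_samples)


def race_model_violation(rt_a, rt_v, rt_av, time_points):
    """Return (t, violation) pairs.

    violation > 0 means the audiovisual CDF exceeds the race-model bound
    min(1, F_A(t) + F_V(t)) at time t.
    """
    a, v, av = sorted(rt_a), sorted(rt_v), sorted(rt_av)
    results = []
    for t in time_points:
        bound = min(1.0, ecdf(a, t) + ecdf(v, t))
        results.append((t, ecdf(av, t) - bound))
    return results


# Hypothetical reaction times in milliseconds (illustration only).
rt_a = [310, 330, 350, 370, 390]
rt_v = [320, 340, 360, 380, 400]
rt_av = [250, 260, 270, 280, 290]

violations = race_model_violation(rt_a, rt_v, rt_av, range(240, 420, 20))
violated_at = [t for t, v in violations if v > 0]
print("race model violated at (ms):", violated_at)
```

In practice the comparison is usually made at RT quantiles per participant and followed by a group-level statistical test, which this sketch omits.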

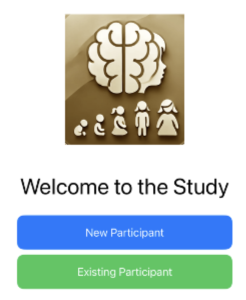

App Based Multisensory Integration Experiments

A phone app for collecting multisensory psychophysical data using standard paradigms.

Vanderbilt University Projects

Consortium Principal Investigator – Mark T. Wallace, PhD

Principal Investigator – David Tovar, MD, PhD

Vanderbilt is the primary site for the consortium and oversees all aspects of the consortium’s work. A major emphasis at the Vanderbilt site will be assessing various facets of multisensory development in children from ages 5 to 21. In addition, Vanderbilt will serve as a focal site for examining how the development of multisensory function differs in neurodiverse populations, such as autistic individuals.

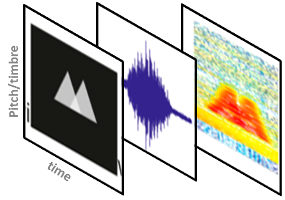

Learned and Semantic Binding Windows Across Development

Semantics help us bind information, but many semantic associations are learned. Here we create synthetic audiovisual stimuli with experimentally learned associations. We will test how this learning changes across development, with the hypothesis that associations are easier to learn early in development.

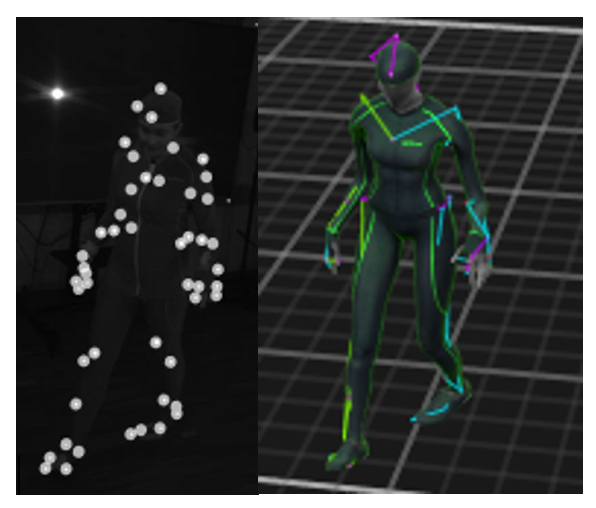

Motion Classification of Stereotypies

Individuals with autism often exhibit stereotyped movements (e.g. hand-flapping). As part of a larger project investigating the potential utility of these stereotypies, we are working on pipelines to automatically classify marker-based movement data sets.

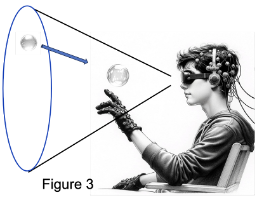

Virtual and Augmented Reality Investigations of Peripersonal Space

We are refining existing paradigms and developing a novel bubble-popping task to measure the extent and flexibility of peripersonal space. These tasks are suitable for children and for individuals with a variety of motor deficits, and will initially be used to test hypotheses about peripersonal space in autistic and neurotypical individuals.

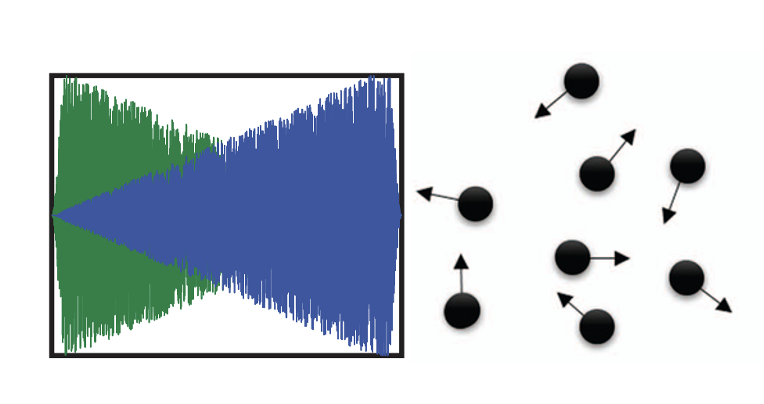

Audiovisual Motion Processing

We are developing auditory and audiovisual versions of the standard visual psychophysics tool known as a Random Dot Kinematogram (RDK). This allows us to test a number of hypotheses about multisensory integration when processing moving stimuli.

Italian Institute of Technology Projects

Principal Investigator – Monica Gori, PhD

The development of multisensory skills is still poorly understood. Our work shows that the interaction and the integration of sensory modalities are closely interconnected. However, how these two mechanisms operate at different ages over the course of development remains unknown. Within the MELD project, we will focus on visual-tactile/haptic and audio-tactile/haptic processing in typically developing children and in children with sensory impairments to determine, at the behavioral and neurophysiological levels, when and how sensory modalities scaffold multisensory integration. To do so, we will develop simple and complex perceptual scenarios that vary task complexity and probe both low-level and higher-level sensory processing.

Multisensory Processing in VI and ADHD

Cross-modal and multisensory effects have been shown to influence perception, impacting both reaction time (RT) and event-related potentials (ERPs). Oddball responses are also shaped by multisensory context. To examine how different senses affect attention, we will assess auditory, visual, tactile, and multisensory oddball tasks in adults. We will then extend this work to children with ADHD, in whom auditory oddball responses are known to differ but multisensory effects are unexplored, and to visually impaired (VI) children, to study how multisensory integration (MSI) and attention interact across sensory contexts.

ROMAT: Visual Tactile Interaction

It has been demonstrated that the visual and tactile systems share common mechanisms for processing motion. The goal of this research is to show that the two systems not only share similar mechanisms but also interact to create a unified perception of the world around us. We are also investigating the role of this interaction in individuals with visual impairments, and how the system reorganizes to compensate for the lack of integration between these two senses.

Cataract and Multisensory Processing

We will assess multisensory integration skills in children with congenital cataracts before and after surgery, in comparison with sighted peers.

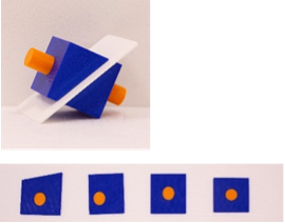

Cross-Modal Visual to Haptic Transfer

We investigate the role of vision in the haptic abilities of blind children and adults. The project uses real and virtual objects to explore haptic skills and body movement in these groups.

Video on Visual to Haptic Transfer

Multisensory Interaction

In every kind of interaction with the environment, we intercept objects and come into contact with them. This project studies the development of multisensory interception skills and how they are altered in sighted, blind, and VI children and adults.

Mixed Reality Validation for Psychophysics Experiments

This research focuses on using virtual and mixed reality to study human perception. These immersive environments allow us to explore how different senses interact and run psychophysical experiments. By leveraging advanced technology, we aim to better understand sensory integration and human cognition in both controlled and dynamic virtual settings.

University Hospital Center and University of Lausanne Projects

Principal Investigator – Micah Murray, PhD

The University Hospital Center and University of Lausanne (CHUV-UNIL) team will particularly focus on the role of early life experience on multisensory development, including but not limited to premature birth and schooling environment. The CHUV-UNIL team will also put particular emphasis on how multisensory processes provide the scaffolding for higher-cognitive development and thus provide a strong candidate access point for remediation and potential prevention. Finally, the CHUV-UNIL team will concentrate effort on bridging sensation, perception and resultant movement in immersive environments.

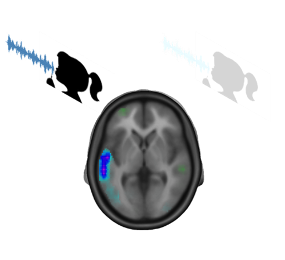

Conspecific Voice Recognition in Prematurely Born Schoolchildren

Studies show that prematurely born infants often have difficulties recognizing voices, but it is unclear whether these challenges persist into later childhood. This project investigates that question by presenting prematurely born schoolchildren with human and animal vocalizations to assess the integrity of neural mechanisms for processing human voices.

Integrity of Mirror Neuron Mechanisms in Schoolchildren

The so-called mirror neuron system can be differentially activated by sounds that have associated actions (De Lucia et al., 2010 Neuroimage). What is currently unknown is whether and when this is observable in children. Answering this may offer new insight into the integrity of this system and the development of theory of mind.

Plasticity of Object Representations in Schoolchildren

Object representations of environmental sounds are subject to short-term plasticity due to repeated exposure (Murray et al., 2008 Neuroimage). This plasticity manifests behaviourally as priming and electrophysiologically as repetition suppression. We currently do not know whether and how this manifests in schoolchildren as well as in children born prematurely.

Intraindividual Variability in Multisensory Processes in Children With and Without ADHD

Children with ADHD show greater intra-individual variability, while multisensory input can reduce behavioral variability. It is unclear whether this reduction also applies in ADHD, or how these children integrate multisensory information. This study uses a multisensory Go/NoGo task with EEG, actigraphy, and ecological momentary assessments (EMAs) to investigate these questions.

Multisensory Learning of Foreign Languages

This project examines the interplay of learning strategies and multisensory processes for foreign language acquisition in adults and children.

Influence of Montessori Pedagogy on Cognitive Control

Cognitive control—the ability to regulate thoughts and actions—supports academic success. It is often measured with brief tasks, but real life demands sustained control over longer periods. While research shows that environment shapes cognitive control, the impact of different teaching methods remains largely unknown.

Learning Strategies in Sensory Substitution

This project investigates long-held assumptions about how visual sensory information can be translated into an interpretable auditory signal for sensory substitution purposes. This is important for the goals of MELD because even children without sensory impairments may benefit from sensory substitution for object recognition and interactions. A first step will be to investigate this conversion in adults before assessing children.

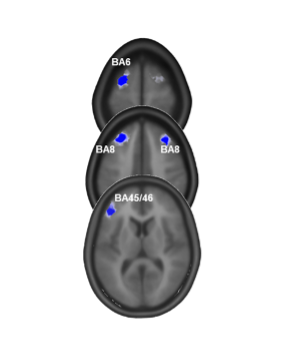

Spatio-Temporal Brain Dynamics of Sensory Substitution

It has been proposed that the brain is a sensory-independent task-relevant machine. Empirical support for this proposal largely comes from fMRI data. However, spatio-temporal brain dynamics are needed to support or refute whether a nominally visual brain region is itself the first to perform a given discrimination function.

Eye-tracking Feedback Loops for Stimulus Presentation and Control

This project focuses on building an eye-tracker-based heat map of gaze direction that in turn controls stimulus presentation. It will be implemented first in adults and subsequently in children.

Yale University Projects

Principal Investigators – David Lewkowicz, PhD, & Nick Turk-Browne, PhD

The Yale site will contribute its unique expertise and facilities by pursuing studies in infants and toddlers, in addition to young children. These include fMRI studies in awake infants and toddlers to assess multisensory integration across the early developing brain, as well as behavioral studies with eye-tracking to investigate temporal binding and perceptual segregation of audiovisual speech.

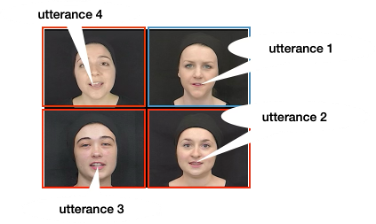

Multisensory Cocktail Party Problem in Children: Effects of Facial Configuration and Linguistic Cues on Perceptual Segregation

This study investigates the effects of multisensory clutter on perception and cognition in young children. It examines children’s responsiveness to multiple talking faces and the effects of facial configuration cues, as well as A-V synchrony, visual and acoustic identity, and linguistic cues, on the segregation of multiple talking faces.

Multisensory Cocktail Party Problem in Children: Effects of Early Face & Linguistic Experience on Perceptual Segregation

Segregation of multisensory clutter depends on familiarity with talking faces. Here, we investigate whether seeing other-race (Asian) faces speaking in an unfamiliar language (Mandarin) affects White-Caucasian, English-learning children’s ability to perceptually segregate multiple talking faces.

Multisensory Cocktail Party Problem in Infants

Integration of auditory and visual speech cues develops rapidly in infancy, but prior studies have focused only on isolated syllables, limiting their generalizability. Using our multisensory cocktail party paradigm, we examine infants’ integration of fluent audiovisual speech and how multisensory clutter affects this ability.

Neural Processing of Audiovisual Synchrony in Infants and Children

Here we are leveraging state-of-the-art fMRI techniques and intersubject correlation analyses to study the early development of how audio-visual temporal synchrony affects multisensory integration across the brain during naturalistic perception.
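As a minimal illustration of the intersubject correlation idea, the sketch below computes a leave-one-out ISC for a single brain region: each participant's time course is correlated with the average time course of all other participants. The function names and the toy data are hypothetical, and the sketch is not the analysis pipeline used in this project.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def leave_one_out_isc(timecourses):
    """Correlate each participant's time course with the mean of all others."""
    iscs = []
    for i, own in enumerate(timecourses):
        others = [tc for j, tc in enumerate(timecourses) if j != i]
        mean_others = [sum(vals) / len(vals) for vals in zip(*others)]
        iscs.append(pearson(own, mean_others))
    return iscs


# Toy data (hypothetical): a shared stimulus-driven response plus a small
# participant-specific component for each of three participants.
shared = [0.0, 1.0, 0.5, -0.5, -1.0, 0.0, 1.0, 0.5]
individual = [
    [0.1, -0.1, 0.0, 0.1, -0.1, 0.0, 0.1, -0.1],
    [-0.1, 0.0, 0.1, -0.1, 0.0, 0.1, -0.1, 0.0],
    [0.0, 0.1, -0.1, 0.0, 0.1, -0.1, 0.0, 0.1],
]
timecourses = [[s + n for s, n in zip(shared, noise)] for noise in individual]

isc = leave_one_out_isc(timecourses)
print([round(r, 3) for r in isc])
```

High values indicate that a region responds to the stimulus in a similar way across participants; comparing ISC between synchronous and asynchronous audiovisual conditions is one way such a measure could be used.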

Multisensory Integration in Memory Systems

The goal of this fMRI project in adults is to examine the integration of multisensory signals in the hippocampus, a major convergence zone in the human brain that receives inputs from all sensory systems and that encodes memories of multisensory events.

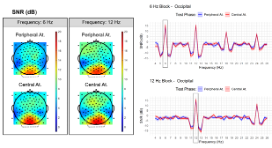

The Use of EEG Frequency Tagging to Extract Separate Measures of Overt and Covert Attention

Using EEG frequency tagging, we have developed a method for rapidly extracting separate measures of overt and covert attention from the brain’s response to visual information. Findings indicate that covert attention reduces overt attention when the two compete. Our plan is to adapt this technique for the study of multisensory overt and covert attention.