Projects

UAV System-Wide Safety

Unmanned aerial vehicles (UAVs) are increasingly used in applications like delivery, search and rescue, and agriculture. Due to their small size and weight, they are heavily impacted by disturbances like winds or faults. As a part of a NASA-funded System-Wide Safety project, we are improving the safety of UAVs. There are three major phases to this project.

- Octorotor Simulation: We developed open-source Python and MATLAB simulations of an octorotor UAV. Our simulators allow low-level control of the UAV and customization of the wind and fault conditions. The Python version of this simulation is available at https://github.com/hazrmard/multirotor. The MATLAB version will be available soon.

- Reinforcement Learning Disturbance Rejection Control: We developed a UAV controller that reduces the deviation from the flight plan under extreme wind and fault conditions. Our controller uses a reinforcement learning agent to modify the reference velocity fed to a low-level cascade PID controller. We applied this controller in our simulation and in real flights at MIT Lincoln Lab. See the video below for a demonstration of our controller under extreme (12 m/s) north wind in simulation.

- UAV System-level Prognostics: Over the lifetime of a UAV, components like motors and the battery degrade. This can lead to faults and a loss of system-level performance. To address this, we generated a dataset of UAVs across their lifetime where we modeled the degradation of motor and battery parameters. We used this to develop a data-driven system-level prognostics model that can estimate the remaining useful life of a UAV. The dataset and paper detailing our approach will be released soon.
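The control structure described in the second phase above, where a learned agent adjusts the reference velocity passed to a cascade PID controller, can be illustrated with a minimal sketch. This is not the project's controller: the 1-D point-mass dynamics, the gains, and the names `PID`, `simulate`, and `zero_policy` are all assumptions made for illustration, and the `policy` argument is a placeholder for a trained reinforcement learning agent.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Basic PID controller (illustrative gains, not tuned for a real UAV)."""
    kp: float
    ki: float = 0.0
    kd: float = 0.0
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def zero_policy(pos: float, vel: float, target: float) -> float:
    """Placeholder for a trained agent: applies no correction."""
    return 0.0


def simulate(policy, wind_accel=0.0, target=10.0, steps=2000, dt=0.01):
    """Hypothetical 1-D point mass tracking `target` under a constant wind acceleration."""
    pos_pid = PID(kp=1.0)           # outer loop: position error -> reference velocity
    vel_pid = PID(kp=4.0, ki=2.0)   # inner loop: velocity error -> commanded acceleration
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        v_ref = pos_pid.update(target - pos, dt)
        v_ref += policy(pos, vel, target)   # agent's correction to the reference velocity
        accel_cmd = vel_pid.update(v_ref - vel, dt)
        vel += (accel_cmd + wind_accel) * dt
        pos += vel * dt
    return pos


final_position = simulate(zero_policy, wind_accel=3.0)
```

Keeping the PID cascade in the loop means the agent only shapes the reference signal; the low-level loops still generate the actuator commands, which is one plausible reason to prefer this layout over end-to-end learned control.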

Selected Publications

Coursey, A., Zhang, A., Quinones-Grueiro, M., and Biswas, G., “Hybrid Control Framework of UAVs Under Varying Wind and Payload Conditions,” 2024 American Control Conference (ACC), 2024.

Coursey, Austin, Marcos Quinones-Grueiro, and Gautam Biswas. “An Experimental Framework for Evaluating the Safety and Robustness of UAV Controllers.” AIAA AVIATION FORUM AND ASCEND 2024. 2024.

Coursey, Austin, Marcos Quinones-Grueiro, and Gautam Biswas. “On Learning Data-Driven Models For In-Flight Drone Battery Discharge Estimation From Real Data.” 2023 IEEE International Conference on Smart Computing (SMARTCOMP). IEEE, 2023.

Data-driven System-level Prognostics

As systems and devices are used, their components degrade. Their remaining useful life decreases with each use. Estimating this remaining useful life is a main task in prognostics. Data-driven approaches for prognostics typically predict the remaining useful life at the component level. In this work, we estimate the system-level remaining useful life using purely data-driven methods. Our methods are inspired by model-based techniques, and we apply them to unmanned aerial vehicles.

An example of our recently developed approach applied to an aircraft engine dataset can be seen in the video below. In this approach, we estimate the system performance and the remaining useful life. As the engine approaches the end of life, our performance estimates get more accurate and confident, leading to nearly perfect remaining useful life estimations.
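To make the link between a system performance estimate and the remaining useful life concrete, here is a deliberately simple sketch. It is not the approach from the publications below: it assumes a scalar performance metric that degrades roughly linearly, fits a straight line with NumPy, and extrapolates to a failure threshold. The function name `estimate_rul` and the threshold value are hypothetical.

```python
import numpy as np


def estimate_rul(cycles, performance, threshold):
    """Extrapolate a linear performance trend to `threshold` and return the cycles left.

    Assumes lower performance is worse and that failure occurs when the
    metric falls to `threshold` (an illustrative definition of end of life).
    """
    slope, intercept = np.polyfit(cycles, performance, deg=1)
    if slope >= 0:
        # No measurable degradation in the observed window.
        return float("inf")
    end_of_life = (threshold - intercept) / slope
    return float(max(0.0, end_of_life - cycles[-1]))


# Synthetic example: performance drops by 0.01 per cycle from 1.0.
cycles = list(range(50))
performance = [1.0 - 0.01 * c for c in cycles]
rul = estimate_rul(cycles, performance, threshold=0.2)
```

With more observations near the end of life, the fitted trend is constrained by more data, which mirrors the observation above that estimates improve as the system approaches failure.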

Selected Publications

Diaz-Gonzalez, Abel, Austin Coursey, Marcos Quinones-Grueiro, and Gautam Biswas. “A Flexible Data-Driven Prognostics Model Using System Performance Metrics.” IFAC-PapersOnLine 58, no. 4 (2024): 222-227.

Diaz-Gonzalez, Abel, Austin Coursey, Marcos Quinones-Grueiro, Chetan S. Kulkarni, and Gautam Biswas. “Data-Driven RUL Prediction Using Performance Metrics (Short Paper).” In 35th International Conference on Principles of Diagnosis and Resilient Systems (DX 2024), pp. 21-1. Schloss Dagstuhl–Leibniz-Zentrum für Informatik, 2024.

Diaz-Gonzalez, Abel, Austin Coursey, Marcos Quinones-Grueiro, and Gautam Biswas. “A Data-Driven Particle Filter Approach for System-Level Prediction of Remaining Useful Life.” In 36th International Conference on Principles of Diagnosis and Resilient Systems (DX 2025), pp. 11-1. Schloss Dagstuhl–Leibniz-Zentrum für Informatik, 2025.

Safe Continual Reinforcement Learning

As our society becomes more automated, we have a growing need for autonomous agents that can operate for long periods. However, real-world environments are often non-stationary, meaning they change over time. To address this, agents need to continually adapt while retaining previously learned knowledge. The field of continual reinforcement learning attempts to address this problem, developing agents that continue to learn over their lifetime. However, the safety of these lifelong adaptations is unclear. In this project, we aim to determine the safety of continual reinforcement learning and introduce methods for lifelong learning that do not risk the safety of the agent.

Selected Publications

A. Coursey, M. Quinones-Grueiro and G. Biswas, “On the Design of Safe Continual RL Methods for Control of Nonlinear Systems,” 2025 European Control Conference (ECC), Thessaloniki, Greece, 2025, pp. 892-897, doi: 10.23919/ECC65951.2025.11187149.

Past Projects

The goal of this project is to support safe operations of unmanned aerial vehicles (UAVs) for urban mobility. UAV operations present hazards to other airspace users and to people and property on the ground, so monitoring, analysis, and control schemes need to be developed to ensure that the risks attributed to these hazards are managed to acceptable levels. We are working on the design of algorithms and corresponding computational architectures that minimize risk in flight (i.e., maximize safety under the given operating conditions), while also performing degradation monitoring to support system-level prognostics and track UAV performance during a mission.

Path planning algorithms that perform risk analysis on projected UAV flight paths enable safe operations. However, most approaches ignore the fact that the vehicle’s operating conditions can change during flight, which can make the flight unsafe. Online monitoring of the UAV enables system-level prognostics that combine the effects of multiple degrading components to determine how system functionality and performance are affected over the flight trajectory. Taking into account the degrading state of the UAV and the changes caused by environmental parameters, we propose a path planning approach that computes the mission trajectory using an online risk analysis methodology. The goal of the proposed approach is to adjust the mission trajectory as necessary to reduce risks and maintain overall system safety under current and predicted environmental conditions.

Selected Publications

M. Quiñones-Grueiro, T. Darrah, G. Biswas, and C. Kulkarni, “A Decision-Making Framework for Safe Operations of Unmanned Aerial Vehicles in Urban Environments,” PHM Society Conference, 2020 (preprint).

Darrah, T., Kulkarni, C., Biswas, G. (2020). The Effects of Component Degradation on System-Level Prognostics for the Electric Powertrain System of UAVs. AIAA Scitech Forum.

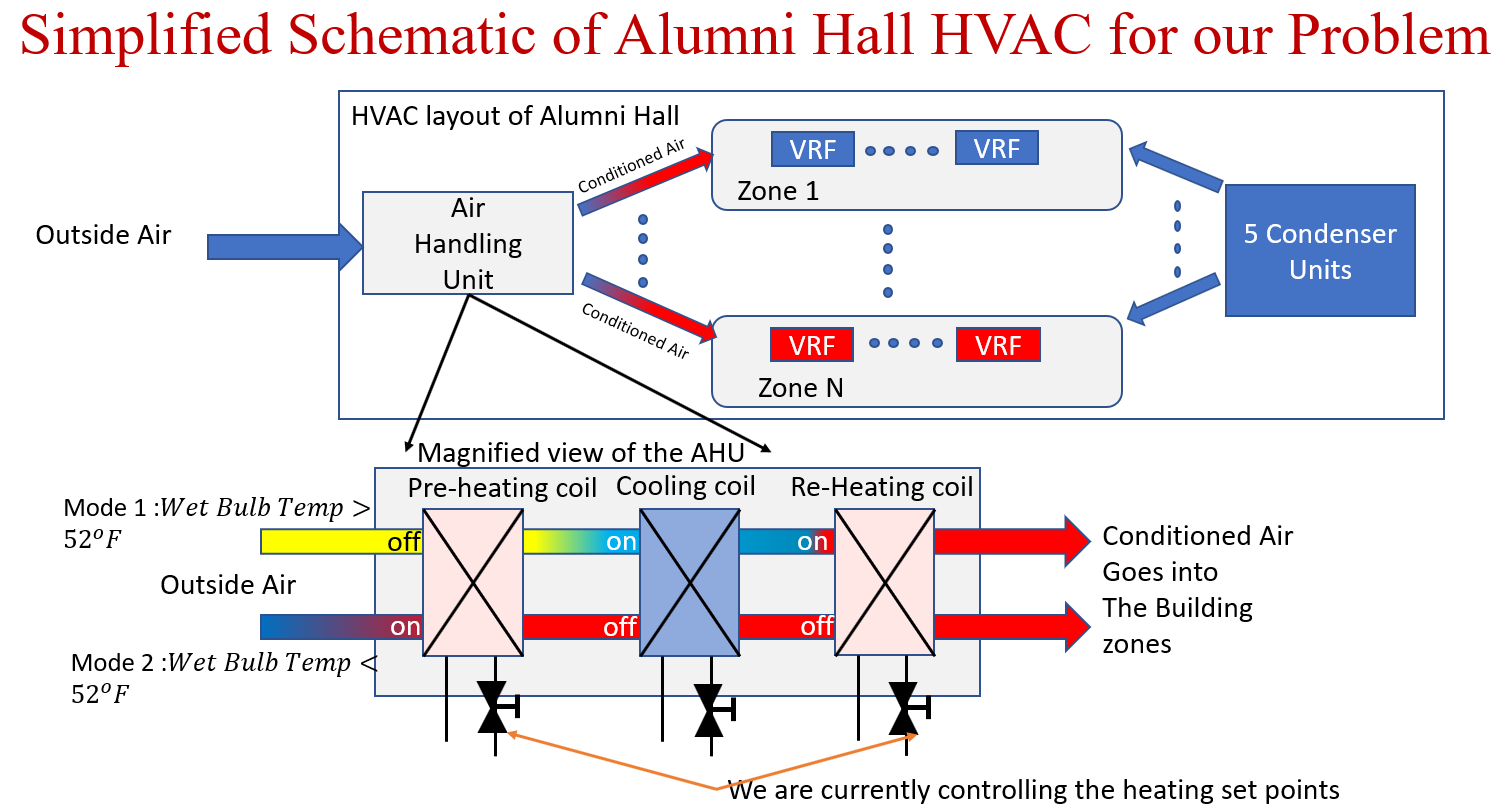

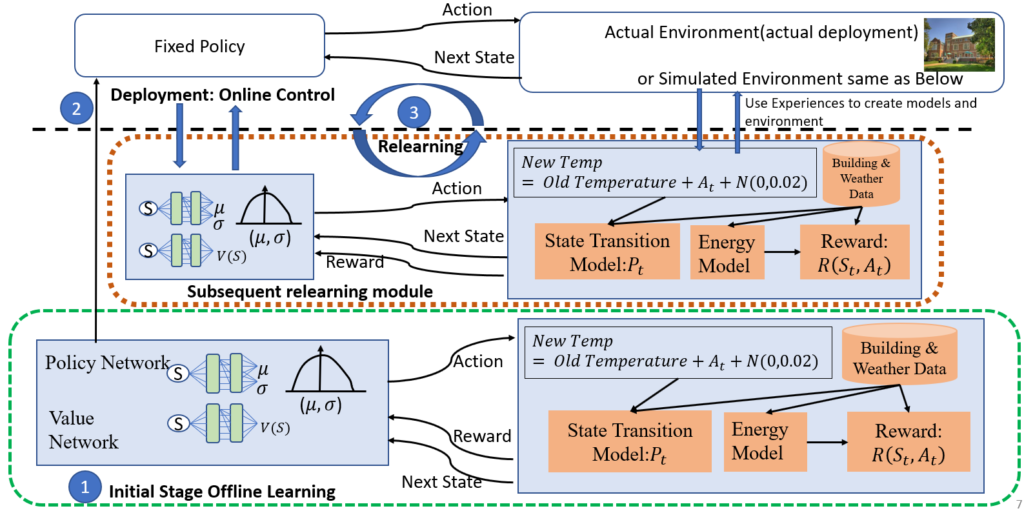

We demonstrate the relearning for continuous adaptation approach on a data-driven simulated model of a real HVAC system on our university campus. Since the data-driven simulated system needs to be updated over time, we also implement a relearning schedule in which the simulated system is updated through either periodic or trigger-based updates. We employ deep LSTMs to model the heating energy, cooling energy, valve behavior, etc. for the simulated setup. These models are trained on historical data from the real HVAC system.

To develop the controllers, we currently use the PPO algorithm, which performs strongly on benchmark RL tasks. PPO is a policy gradient algorithm in which we create a deep policy network, which is the controller, and a deep value network, which evaluates the controller’s actions. These are trained using batches of experiences generated by the policy network interacting with the simulated non-stationary system. The policy gradient is used to update the policy network at every training step, and the reward signal determines the quality of the actions taken by the controller in each batch of experiences. For the HVAC system, the reward signal incentivizes energy savings and comfort when the system is controlled by supervisory actions from the RL controller. Thus our approach can be termed a “Data-Driven Deep Reinforcement Learning Approach,” in which we use deep neural networks to learn features and patterns in the HVAC behavior and to create controller networks that optimize energy and comfort.
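For readers unfamiliar with PPO, the quantity maximized at each policy update is the standard clipped surrogate objective. The sketch below computes it with NumPy for a batch of experiences. It is a generic illustration of the published PPO objective, not the project's training code; the function name `ppo_clipped_objective` and the default `clip_eps=0.2` are assumptions.

```python
import numpy as np


def ppo_clipped_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch.

    new_log_probs / old_log_probs: log-probabilities of the taken actions under
    the current policy and the policy that collected the batch.
    advantages: advantage estimates, e.g. derived from the value network.
    """
    ratio = np.exp(np.asarray(new_log_probs, dtype=float)
                   - np.asarray(old_log_probs, dtype=float))
    advantages = np.asarray(advantages, dtype=float)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Taking the element-wise minimum removes the incentive to move the
    # policy far from the one that generated the data.
    return float(np.mean(np.minimum(unclipped, clipped)))


# A probability ratio of 2 with a positive advantage is capped at 1 + clip_eps.
objective = ppo_clipped_objective(np.log([2.0]), [0.0], [1.0])
```

In a full implementation this objective would be maximized by gradient ascent on the policy network parameters, alongside a separate regression loss for the value network.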

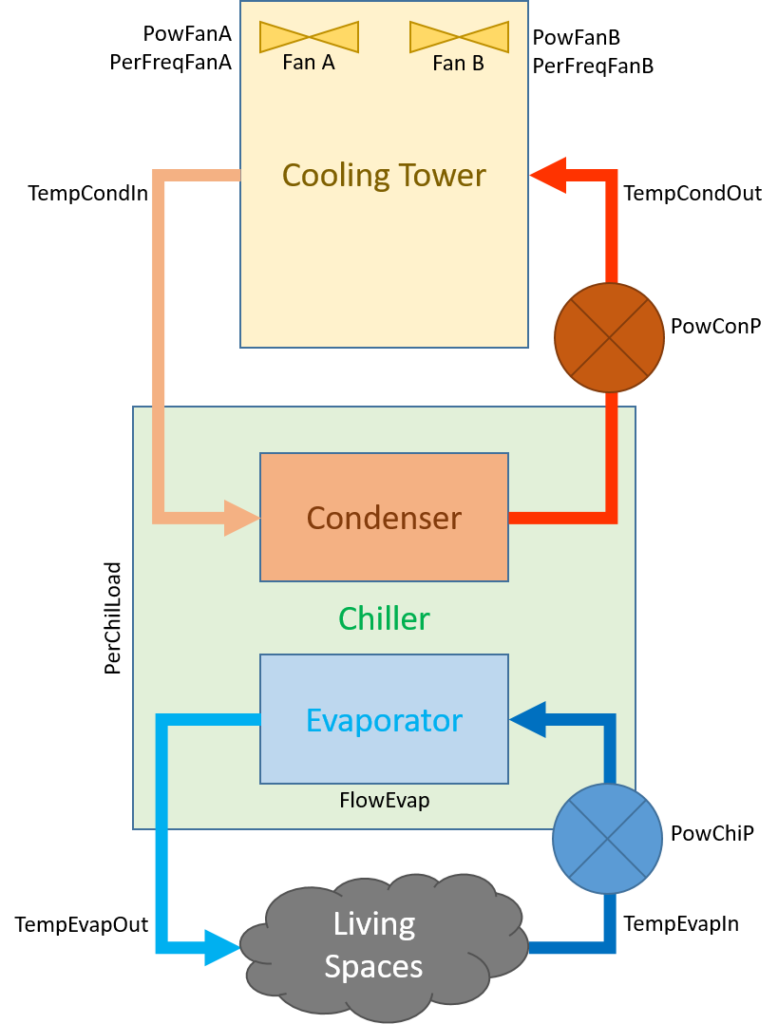

Heating, Ventilation, and Air Conditioning (HVAC) systems are used to control temperature and humidity inside buildings. They use cooling towers at one end to expel excess heat from the refrigerant into the atmosphere through evaporative cooling. The rate of cooling depends on the cooling tower surface area, the humidity, the temperature, and the speed of the water and air. An optimal controller sets fan speeds and output water temperature set-points that are physically feasible and save energy. It also tries to maintain occupant comfort, making this a multi-objective optimization problem. We use reinforcement learning to train controllers that can learn and operate across changing weather conditions while saving energy. The code and data for this system can be found here.
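One common way to turn the multi-objective problem described above into a single reinforcement learning reward is a weighted sum of an energy term and a comfort penalty. The sketch below shows that idea only; the function name `hvac_reward`, the comfort band, and the weights are hypothetical and are not taken from the project's code.

```python
def hvac_reward(energy_kwh, zone_temp_c,
                comfort_low_c=20.0, comfort_high_c=24.0,
                w_energy=1.0, w_comfort=2.0):
    """Scalar reward: penalize energy use and deviation outside a comfort band.

    All defaults are illustrative assumptions, not calibrated values.
    """
    # Degrees by which the zone temperature falls outside the comfort band.
    violation = (max(comfort_low_c - zone_temp_c, 0.0)
                 + max(zone_temp_c - comfort_high_c, 0.0))
    return -(w_energy * energy_kwh + w_comfort * violation)


comfortable = hvac_reward(energy_kwh=2.0, zone_temp_c=22.0)
too_warm = hvac_reward(energy_kwh=2.0, zone_temp_c=26.0)
```

The ratio of the two weights sets how much extra energy the controller is willing to spend to avoid one degree of discomfort, so it would normally be tuned for the building in question.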

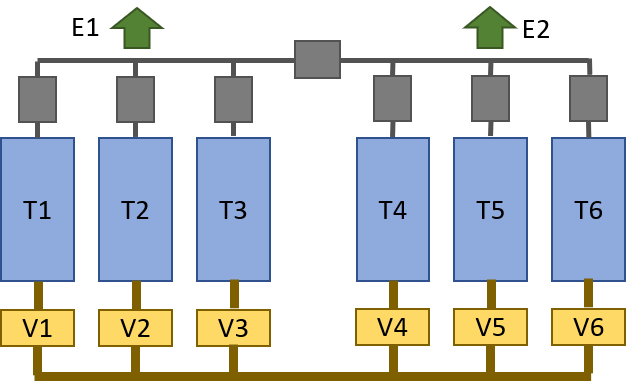

This is a simplified model of a fuel transfer system in an aircraft. There are 6 fuel tanks. Each tank is connected to a shared conduit via valves (V*). Once the valves are open, fuel can flow between tanks under gravity. The tanks are also connected to engines via pumps, which drain fuel from the tanks according to a prescribed schedule. The tanks are drained innermost first. Faults in the system can be changes in engine fuel demands, pump capacities, valve resistances, etc. Faults can be gradual or abrupt. We frame this as a reinforcement learning problem. This requires formulating a reward function, building data-driven models, and choosing an algorithm that can adapt quickly to faults. The code for this system can be found here.

Selected Publications

I. Ahmed, H. Khorasgani, and G. Biswas, “Comparison of Model Predictive and Reinforcement Learning Methods for Fault Tolerant Control,” IFAC-PapersOnLine, vol. 51, no. 24, pp. 233–240, 2018.

I. Ahmed, M. Quiñones-Grueiro, and G. Biswas, “Fault-Tolerant Control of Degrading Systems with On-Policy Reinforcement Learning,” IFAC-PapersOnLine, 2020.

I. Ahmed, M. Quiñones-Grueiro, and G. Biswas, “Complementary Meta-Reinforcement Learning for Fault-Adaptive Control,” PHM Society Conference, 2020.
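The fuel transfer system described above (tanks joined to a shared conduit by valves, with pumps draining fuel to the engines) can be approximated by a very small simulation step. This is a toy model under stated assumptions, not the project's simulator: flow through an open valve is taken to be proportional to the difference between the tank level and the mean level of all open tanks, every valve has the same resistance, and the name `step_tanks` and its parameters are hypothetical.

```python
import numpy as np


def step_tanks(levels, valve_open, pump_rate, resistance=1.0, dt=0.1):
    """Advance tank levels by one time step.

    levels:     current fuel level in each tank
    valve_open: True where the tank's valve to the shared conduit is open
    pump_rate:  fuel drained per unit time from each tank by its pump
    """
    levels = np.asarray(levels, dtype=float)
    valve_open = np.asarray(valve_open, dtype=bool)
    new_levels = levels.copy()
    if valve_open.sum() >= 2:
        # Gravity-driven exchange: open tanks move toward their common mean level.
        conduit_level = levels[valve_open].mean()
        new_levels[valve_open] += (conduit_level - levels[valve_open]) / resistance * dt
    # Pumps cannot remove more fuel than a tank holds.
    drained = np.minimum(np.asarray(pump_rate, dtype=float) * dt, new_levels)
    return new_levels - drained


levels = [10.0, 0.0, 5.0, 5.0, 5.0, 5.0]
for _ in range(200):
    levels = step_tanks(levels, [True] * 6, [0.0] * 6)
```

In this toy model, a fault such as an increased valve resistance or a reduced pump capacity would be represented by changing `resistance` or `pump_rate` during a run, which is the kind of change a fault-adaptive controller would need to handle.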

This is a multi-year STTR project in collaboration with Qualtech Systems, Inc., to develop an advanced health management system for Navy patrol boats. System health monitoring and management helps improve mission readiness and success. In long missions, or in missions performed in harsh environments, Health Management Systems (HMS) may spell the difference between successful mission completion and failure. Health monitoring includes diagnosis and prognosis to assess a system’s health and how it may change as future missions are conducted. Diagnosis is an assessment of the current and past health of a system based on observed symptoms, sensor data, and built-in tests. Prognosis is an assessment of precursor events or degradations that may affect the system health over a specified time interval. Such degradation assessment may drive intelligent maintenance, or be used during operations to manage health and defer or prevent failure during a mission. Thus, an HMS is the key to condition-based maintenance (CBM) and is critical for improving safety, planning missions, scheduling maintenance, and reducing maintenance costs and downtime. The computational architecture is shown below in Figure 1.

Navy Combatant Craft Health Management System