Projects

Engage AI Institute

The National Science Foundation AI Institute for Engaged Learning (Engage AI Institute) is guided by a vision of supporting and extending the capabilities of teachers and students with artificial intelligence (AI). Its mission is to produce transformative advances in STEM teaching and learning with AI-driven narrative-centered learning environments. The Engage AI Institute conducts research on narrative-centered learning technologies, embodied conversational agents, and multimodal learning analytics to create deeply engaging, collaborative, story-based learning experiences. It aims to build these AI-driven learning environments on advances in natural language processing, computer vision, and machine learning. Its vision is informed by a nexus of stakeholders to ensure that AI-empowered learning technologies are ethically designed and prioritize diversity, equity, and inclusion.

Simulation-Based Learning in Nursing

This project brings together Peabody College, the College of Engineering, and the School of Nursing. Together, we have developed data collection procedures, created technical resources, and run pilot studies on the nursing simulations that take place in the School of Nursing's Skills and Simulation Lab. A key element of the project is that nursing students wear head-mounted Tobii Pro eye-tracking units that record their eye gaze during simulations. Analyses of the simulations include AI-based multimodal data fusion, which combines records of motion, discussion, and eye gaze during these clinical scenarios.

The team consists of Dan Levin (Department of Psychology and Human Development, Peabody College), Gautam Biswas (College of Engineering), Mary Ann Jessee (School of Nursing), Jo Ellen Holt and Eric Hall (Skills and Simulation Lab), as well as graduate and undergraduate students from both Peabody College and the College of Engineering. Key student contributors are Madison Lee, Caleb Vatral, Clayton Cohn, and Eduardo Davalos Anaya.

Multimodal Learning Data Pipeline

In addition to our online response and feedback system, we are developing a more general foundation for multimodal analytics that will apply to a broad range of simulation-based learning studies. This system will support data-intensive pipelines for uploading, archiving, analyzing, and displaying data. We have working prototypes of several parts of this system.

Digital Reading

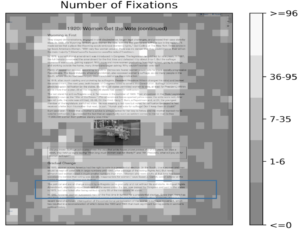

Dr. Goodwin and Dr. Biswas from the LIVE faculty are applying a multimodal analytic approach to studies comparing digital- and paper-based reading. They are also leveraging our multimodal analysis pipeline alongside head-mounted eye-tracking hardware for use with elementary school children.

A key element of LIVE's support in this project is incorporating the LIVE head-mounted eye trackers to enhance the multimodal analytics that create rich records of elementary school students as they read digital texts. The eye-tracking data are combined with webcam video, which is analyzed for students' cognitive and emotional states while they read. A key advantage of these head-mounted devices is that they contextualize gaze during reading by capturing looking behavior that extends beyond the screen, which also makes it possible to compare digital reading with traditional paper-based reading.

Computer Science Frontiers (CSF)

There is a need to dramatically expand access, especially for high school girls, to the most exciting emerging frontiers of computing, such as distributed computation, the Internet of Things (IoT), cybersecurity, and machine learning, as well as to the other 21st-century skills required to productively leverage computational methods and tools in virtually every profession.

To achieve this goal, Dr. Akos Ledeczi and his team are designing a new, modular, open-access curriculum called Computer Science Frontiers (CSF) that provides an engaging high school introduction to these advanced topics, which are currently accessible only to CS majors in college.

The curriculum uses NetsBlox and consists of four 9-week modules. Each module is designed as a series of units, with each unit comprising 3-5 hours of instruction. Each unit consists of an introductory opener activity that provides context and motivation, followed by a series of activities specifically designed to scaffold learning and minimize cognitive load, especially for novice learners. The four modules are:

- Distributed Computing

- Internet of Things and Cybersecurity

- AI and Machine Learning

- Software Engineering/Entrepreneurship

The curriculum is currently being piloted as a full-year course at Martin Luther King High School in Nashville.